On the road towards autonomous agents powered by large language models (LLMs), the Model Context Protocol (MCP) is currently one of the hottest topics. At conferences, panels, and coffee-fueled meetups, everyone talks about MCP-backed agentic systems, giving the overall impression that agents are already close to self-awareness and self-evolution - ready to solve all your business problems out of the box in just a couple of minutes.

But before we all sign up for a lifetime pass on this high-speed ride and hand over the keys to our businesses, let’s take a moment to look out the window and ask ourselves:

What powers this hype? Beyond the buzzwords and the demo videos with a lot of text being generated at high speed, what is happening under the hood of these MCP-powered systems, and why exactly do we need them? How does MCP fit into the existing LLM technology landscape, and what are its use cases and concrete applications? What are the challenges with the current state of MCP regarding, for example, security, adoption, and maturity?

We try to answer these questions step by step in our own series of articles about MCP, starting here with a practice-oriented introduction to the topic. So, buckle up, grab your popcorn, and let’s ride the line between hype and reality - and see where MCP fits in.

Not so long ago, the first LLM-based applications were published and quickly became part of both business and personal workflows. Probably the best-known example is ChatGPT by OpenAI with its underlying GPT models.

During inference, when an LLM produces its response, it can only “see” the tokens (or text, in layman’s terms) placed in its context window. This window is the model’s short-term memory, consisting of the current prompt, the included conversation history, a system prompt, and/or “additional context”. The additional context is information from sources outside the LLM’s own knowledge, produced by tools the model can call, e.g. retrieval from knowledge repositories, a Python script, or an internet search.
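To make the parts of the context window tangible, here is a minimal sketch of how an application might assemble them into one text before calling a model. The class name `ContextWindow`, the bracketed role labels, and the character budget are our own illustrative assumptions; real systems count tokens rather than characters and use model-specific chat formats.

```python
from dataclasses import dataclass, field


@dataclass
class ContextWindow:
    """Hypothetical container for everything the model can 'see' in one call."""
    system_prompt: str
    history: list[tuple[str, str]] = field(default_factory=list)  # (role, text)
    additional_context: list[str] = field(default_factory=list)   # tool outputs

    def render(self, prompt: str, max_chars: int = 2000) -> str:
        """Join all parts; drop the oldest history turns if over budget."""
        history = list(self.history)  # work on a copy, keep the original intact
        while True:
            parts = [f"[system] {self.system_prompt}"]
            parts += [f"[context] {snippet}" for snippet in self.additional_context]
            parts += [f"[{role}] {text}" for role, text in history]
            parts.append(f"[user] {prompt}")
            text = "\n".join(parts)
            # Assumption: a character budget stands in for a token budget.
            if len(text) <= max_chars or not history:
                return text
            history.pop(0)  # forget the oldest turn first


if __name__ == "__main__":
    window = ContextWindow(
        system_prompt="You are a supply chain assistant.",
        history=[("user", "Hi"), ("assistant", "Hello")],
        additional_context=["utilization_de=85%"],
    )
    print(window.render("What is the utilization in Germany?"))
```

The point of the sketch is that anything not rendered into this text, for example a history turn dropped to stay within the budget, is simply invisible to the model.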

For an LLM, the right context is thus essential for generating relevant, reasonable, and accurate responses. Otherwise, it would sometimes only be guessing, a phenomenon generally described as hallucinating.

Since these first LLM-based applications, a lot of new models, each relying on proper context, have emerged. In parallel, a wide range of novel applications built on top of LLMs have appeared. But as we see in our projects, the same use cases often come with a huge variety of implementations. A popular use case among our customers, and a useful example of this, involves applications that retrieve information or generate summaries from company files - capabilities typically embodied by what are known as Retrieval-Augmented Generation (RAG) systems. However, the flexibility in how these, or LLM-based applications more generally, are implemented often leads to several problem areas at once.

This is precisely where MCP, introduced by Anthropic in 2024, comes into play:

A common standard interface defining communication and data flow, avoiding tool/resource-specific implementations and redundancies, and enabling plug-and-play simplicity in connection to LLMs. MCP can thus be seen as analogous to well-known technical standards - e.g. Wireless LAN or USB-C - and enriches the context of an individual LLM by providing additional context via utilities in a generalized way. These utilities, which are called MCP servers, are built just once and can be used everywhere, effectively leading to a growing list of pre-built plugins.

Imagine a multinational manufacturing company with several production facilities across regions, all connected via an ERP system for inventory and purchasing as well as via databases for historical production trends. The company’s operations team wants to make smarter and faster decisions using AI-powered assistance - but with responses grounded in actual production and supply chain data spread across different sources.

A business scenario could be that a supply chain manager opens the company’s chat interface and asks: “What is the current production utilization in Germany, and what are the consequences for inventory management and purchasing?”

Instead of a generic response, the assistant gives a clear, actionable answer:

“The current production utilization of the plants in Germany is 85%, compared to 78% last week. At this rate, raw material stocks are expected to be fully consumed in 5 days. The current lead time for purchasing is 7 days on average, indicating a potential shortfall unless orders are ramped up. Suggest adjusting purchasing triggers or rescheduling non-priority production lines.”

This is a typical use case, realized by implementing a RAG system, that we currently encounter a lot: a virtual chat assistant based on LLMs that uses actual company systems to perform its tasks, applying the available company information as the foundational context critical to effective task completion.

As the foundation for implementing such a RAG system, we could integrate the company systems through custom logic and API calls. Firstly, this takes time, knowledge of the systems, and coordination with the people responsible for each system about the respective integration. Secondly, we might have to take infrastructure topics, e.g. performance and costs, as well as security aspects into consideration. Lastly, we must also ensure maintainability and long-term compatibility with the integrated systems.

This is where MCP steps in and shows its advantages: it requires one MCP server for each of the underlying source systems in scope (in this scenario, the ERP system and the databases that provide the company’s crucial additional context). Each server needs to be implemented only once according to the MCP standard and can then be provided locally or via the cloud. MCP then enables plug-and-play usage in each individual application, without having to consider all the topics mentioned above again.
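To give a feeling for what “implemented once” could look like, the following is a simplified, in-process imitation of how a server for the ERP system might answer tool requests. MCP messages are based on JSON-RPC 2.0 with methods such as `tools/list` and `tools/call`, but everything else here is an assumption for illustration: the tool name `get_stock_days`, the demo data, and the `handle` function are hypothetical, and a real server would use an MCP SDK and also cover initialization, input schemas, and a transport.

```python
import json
from typing import Any, Callable

# Hypothetical registry of the tools this toy "ERP server" exposes.
ERP_TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, description: str) -> Callable:
    """Register a plain function as a callable tool."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        ERP_TOOLS[name] = {"description": description, "handler": fn}
        return fn
    return register


@tool("get_stock_days", "Days until raw material stock is consumed in a country")
def get_stock_days(country: str) -> str:
    # Stand-in for a real ERP query; the value mirrors the scenario above.
    demo_data = {"DE": 5}
    return str(demo_data.get(country, "unknown"))


def _error(request_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle(request_json: str) -> str:
    """Answer one JSON-RPC 2.0 request (simplified, no input schemas)."""
    req = json.loads(request_json)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": n, "description": t["description"]}
                            for n, t in ERP_TOOLS.items()]}
    elif method == "tools/call":
        spec = ERP_TOOLS.get(params.get("name"))
        if spec is None:
            return _error(req.get("id"), -32602, "Unknown tool")
        text = spec["handler"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return _error(req.get("id"), -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


if __name__ == "__main__":
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "get_stock_days", "arguments": {"country": "DE"}}}
    print(handle(json.dumps(call)))
```

Any application that speaks the same message format can discover and call the tool without knowing how the ERP system is queried behind it, which is the plug-and-play idea in miniature.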

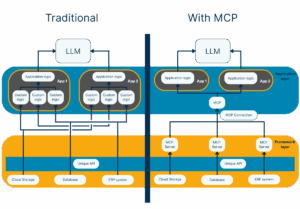

For two exemplary applications (App 1 and App 2), this is shown in the figure below. The custom logic required for the connection of the different technologies is reduced drastically within the application layer based on the introduction of MCP. It is shifted to the framework layer with the technology owners being responsible for the MCP server implementations as their business logic knowledge resides there.

System design of two applications - traditional vs. with MCP.

For the given use case, this means that as soon as the ERP system and database MCP servers are realized, the individual implementation and integration effort for these systems is minimized. The servers can easily be reused organization-wide and can be maintained and scaled centrally, with a clear separation of concerns and modularity. This significantly reduces the overall effort for realizing the RAG system for our multinational manufacturing company, and potentially for all its future RAG systems as well.
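The reuse effect can be illustrated with a deliberately simple counting model. This model is our own assumption, not a measurement: it treats every application-to-system connection as one custom integration, and with MCP it counts one server per system plus one client setup per application.

```python
def custom_integrations(apps: int, systems: int) -> int:
    """Traditional design: every application wires up every system itself."""
    return apps * systems


def mcp_components(apps: int, systems: int) -> int:
    """With MCP: one server per system plus one client setup per application."""
    return apps + systems


if __name__ == "__main__":
    # Hypothetical portfolio sizes, chosen only for illustration.
    for apps, systems in [(2, 2), (5, 3), (10, 6)]:
        print(apps, systems,
              custom_integrations(apps, systems),
              mcp_components(apps, systems))
```

Under this model, two applications and two systems break even at four components each, while five applications and three systems need 15 custom integrations versus 8 MCP components. The benefit therefore grows with every additional application or system that reuses the existing servers.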

By virtue of its core structure, the MCP concept naturally enables more autonomous systems that use MCP servers as required to fulfill a task. These systems - called agents - can not only use the additional context but also react to it, plan multi-step solutions, make decisions, and interact with their environment or other agents in a goal-directed manner.
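The difference between merely receiving context and acting on it can be sketched as a toy loop of planning, acting, and observing. In this sketch a hard-coded rule stands in for the LLM’s planning, and two lambda functions stand in for calls to MCP servers; the names `AGENT_TOOLS`, `plan_next_step`, and `run_agent` are hypothetical, and the numbers are taken from the supply chain scenario above.

```python
from typing import Callable, Optional

# Hypothetical tools standing in for MCP server calls (values from the scenario).
AGENT_TOOLS: dict[str, Callable[[], int]] = {
    "stock_days_left": lambda: 5,
    "purchasing_lead_time_days": lambda: 7,
}


def plan_next_step(state: dict[str, int]) -> Optional[str]:
    """Rule-based stand-in for the LLM: pick the next missing fact, or stop."""
    for name in AGENT_TOOLS:
        if name not in state:
            return name
    return None


def run_agent(max_steps: int = 10) -> str:
    """Plan, act, and observe until enough is known, then decide."""
    state: dict[str, int] = {}
    for _ in range(max_steps):
        step = plan_next_step(state)
        if step is None:
            break
        state[step] = AGENT_TOOLS[step]()  # act, then store the observation
    if len(state) < len(AGENT_TOOLS):
        return "Not enough information to decide."
    gap = state["purchasing_lead_time_days"] - state["stock_days_left"]
    if gap > 0:
        return f"Shortfall risk: orders arrive {gap} days after stock runs out."
    return "No shortfall expected."


if __name__ == "__main__":
    print(run_agent())
```

A real agent would let the model choose among many tools and revise its plan after each observation, but the control flow of gathering context step by step and then deciding is the same.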

To facilitate interaction and coordination among agents, several protocols have been developed. One example is the Agent Communication Protocol (ACP), introduced by IBM, which enables messaging between agents in local environments or a shared runtime with a focus on privacy, observability, and lightweight operation. Another example is Google’s Agent2Agent (A2A) Protocol, which supports real-time and event-driven exchanges across domains and cloud environments in a dynamic and loosely coupled way. Lastly, the open-source Agent Network Protocol (ANP) provides a decentralized network layer for agentic ecosystems across the open internet, designed for a future of agent marketplaces or similar.

ACP, A2A, and ANP address different scopes of inter-agent communication and thus empower agents to talk to each other. In contrast, MCP acts as a standardized context layer for an individual agent and focuses on giving it the internal capabilities to work with different tools and data in a structured way, so that it can reason, plan, and act effectively.

We have now reached our first station on the hype train journey, but the next stops will be even more exciting. In this article, we introduced MCP - its general approach and advantages, a use case example, and its relation to agentic systems.

In Article 2, we will take a deep dive into the structure and concepts of MCP. What do terms like “MCP host,” “MCP client,” and “MCP server” really mean, and how do they come together? What is the maturity status of MCP regarding, e.g., security and adoption, and where do we see some room for improvement? Think of it as the blueprint behind the protocol - revealed.

Then in Article 3, we will conduct a little reality check. You will see how we, the HMS team, are already applying MCP to streamline operations, to implement or enhance customer applications, and in the end to deliver smarter services. Expect practical insights, code snippets, and even a glimpse into our proprietary code migration tool and some customer projects.

In Article 4, we will then focus on agentic systems again, starting with the protocols briefly referenced earlier in this article. How do they work in detail? Are they complementary, resulting in synergies, or are they in competition, working against each other? What are the individual advantages and disadvantages, use cases, and general developments on the market?

The foundation is set. The architecture is the next stop.