Digital sovereignty has long been more than an abstract political debate. It is increasingly becoming a key requirement for modern IT architectures. Those designing analytics and AI platforms face fundamental questions: Where is the data stored? Who controls access? What dependencies arise through software, vendors, and licensing models? And how can these decisions be reconciled with requirements for agility, cost efficiency, and scalability?

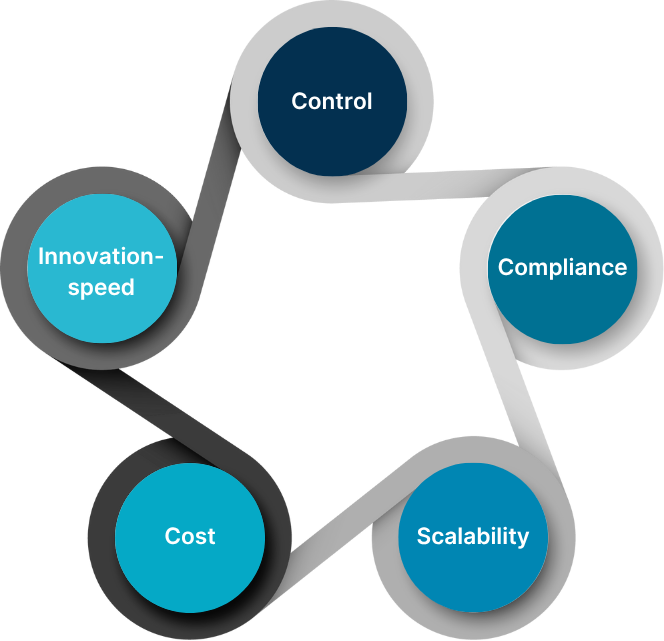

At HMS, we address these questions across five dimensions: control, compliance, scalability, cost, and speed of innovation. They form the framework within which architectural decisions can be structured and prioritized.

Figure 1: Five dimensions for classifying IT architectures.

In this blog article, we introduce the five dimensions of our approach. Using selected projects, we show how companies have worked with HMS to develop solutions that remain sustainable in the long term, each tailored to the customer’s specific conditions.

Many organizations begin their architectural considerations with the desire for maximum control over their IT infrastructure. They want to decide where data is stored, in what format it exists, how it is processed, and which software is used. This sovereignty over systems creates the foundation for compliance: only those who retain technical control can implement data protection, audit compliance, and regulatory requirements consistently and transparently.

Control means not only physical ownership of systems, but also the ability to manage access in a differentiated manner – for example, through Identity and Access Management, Secrets Management, and Policy Enforcement. The more clearly these mechanisms are anchored internally, the greater an organization’s technological independence.

Compliance, in turn, goes far beyond adherence to legal requirements. It also includes internal security policies, auditability, and full traceability of data flows. In regulated industries, compliance has long been a prerequisite for market participation – for example, under ISO 27001, DORA, or NIS2.

However, control and compliance come at a price. Organizations that operate systems entirely on their own must not only provide infrastructure and skilled personnel but also take responsibility for keeping systems secure, current, and available. These tasks are essential, yet they consume capacity – for knowledge building and retention, for instance – that can no longer be devoted to developing new products, services, or analyses.

On the other hand, there is often a strong desire for scalability and availability: the ability to scale compute and storage resources flexibly, distribute systems geographically, and ensure global access with stable performance. Hyperscalers offer this almost out of the box – with globally distributed data centers, built-in redundancy, automatic failover, and guaranteed Service Level Agreements (SLAs).

By contrast, organizations that operate everything themselves must ensure high availability independently – for example, through backup sites, emergency power supplies, replication, and disaster recovery. This is possible, but costly and complex, and it highlights a central tension: the more control an organization wishes to retain, the greater the effort required to achieve resilience and elasticity comparable to what cloud providers deliver as standard.
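To give a rough sense of what redundancy buys, the following sketch computes the combined availability of several redundant sites. It rests on a simplifying assumption that is not part of the original text: sites fail independently of each other, which real deployments only approximate. The 99% figure is illustrative, not a measured value.

```python
HOURS_PER_YEAR = 8760


def combined_availability(site_availability: float, sites: int) -> float:
    """Availability of `sites` redundant sites when any single one can serve traffic.

    Assumes independent failures (a simplification).
    """
    if not 0.0 <= site_availability <= 1.0:
        raise ValueError("site_availability must be between 0 and 1")
    if sites < 1:
        raise ValueError("sites must be at least 1")
    # The service is down only if every site is down at the same time.
    return 1.0 - (1.0 - site_availability) ** sites


def downtime_hours_per_year(availability: float) -> float:
    """Expected downtime per year for a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    for n in (1, 2):
        a = combined_availability(0.99, n)
        print(f"{n} site(s): {a:.4%} available, "
              f"{downtime_hours_per_year(a):.1f} h expected downtime per year")
```

Under this assumption, a second site moves a 99% setup to 99.99%, but only if replication, failover, and disaster recovery actually work as intended, which is where the operational effort lies.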

Cloud and on-premises solutions also differ significantly in terms of cost structure. In the cloud, expenses are usage-based – and can quickly spiral out of control if services are misconfigured. On-premises, by contrast, requires substantial upfront investments in hardware, licenses, and personnel. In return, operating costs are usually more predictable and easier to manage.

In both cases, it pays to consider the entire lifecycle. Beyond visible infrastructure costs, hidden expenses also play a role – for example, for training, integration projects, or legal advice on data protection. A thorough Total Cost of Ownership (TCO) analysis is therefore indispensable.
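A lifecycle comparison of this kind can be sketched in a few lines. The example below is a deliberately simple, undiscounted model; the class name, the cost categories, and all figures are hypothetical and chosen only to show how the time horizon can change which option looks cheaper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostModel:
    """Illustrative cost inputs; all figures used below are hypothetical."""
    upfront: float            # one-off spend: hardware, licenses, migration
    yearly_operations: float  # recurring infrastructure and personnel costs
    yearly_hidden: float      # training, integration projects, legal advice


def total_cost_of_ownership(model: CostModel, years: int) -> float:
    """Undiscounted lifecycle cost over `years` years."""
    if years < 0:
        raise ValueError("years must not be negative")
    return model.upfront + years * (model.yearly_operations + model.yearly_hidden)


# Invented numbers, only to demonstrate the crossover effect.
on_premises = CostModel(upfront=500_000, yearly_operations=120_000, yearly_hidden=30_000)
cloud = CostModel(upfront=50_000, yearly_operations=220_000, yearly_hidden=40_000)

for years in (3, 5):
    print(
        f"{years} years: on-premises {total_cost_of_ownership(on_premises, years):,.0f}, "
        f"cloud {total_cost_of_ownership(cloud, years):,.0f}"
    )
```

With these invented numbers, the cloud option is cheaper over three years and the on-premises option over five. A real analysis would also need to account for discounting, hardware refresh cycles, and usage growth.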

When it comes to innovation speed, the cloud often holds a clear advantage: ready-to-use platforms, tools, and AI models make it possible to implement new ideas within days. On-premises solutions, on the other hand, typically require in-house development, which leads to longer lead times – but also results in less dependency on external vendors.

However, innovation speed is determined not only by infrastructure, but also by how development processes are organized. Continuous Integration (CI), Continuous Delivery (CD), platform capabilities, and standardized interfaces make it easier to integrate new technologies. This continuous, agile iteration process largely determines how quickly an organization can innovate in the market.

These tensions make it clear: there is no single architecture that meets all requirements simultaneously. Many of these dimensions conflict, while others are mutually dependent.

More control can strengthen compliance but may significantly slow innovation. Innovation speed and scalability usually go hand in hand – yet pursuing innovation can create friction with regulatory requirements. And striving for perfect compliance inevitably reduces agility.

Those planning sovereign architectures can never fully resolve these conflicts. The goal, instead, is to balance them consciously – aligned with the organization’s strategic objectives and the conditions in which it operates. These include available budgets, technical and organizational expertise, regulatory demands, industry standards, and leadership’s risk tolerance.

Only when strategy and context are given equal weight can a sustainable, realistic architecture emerge. This is precisely where HMS’s consulting approach comes in: we systematically map an organization’s unique requirements to the five dimensions, derive clear priorities, and then collaborate with clients to make architectural decisions that are both viable today and open to future development.
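One generic way to make such a prioritization explicit is a weighted scoring matrix. The sketch below is not HMS’s actual methodology; the weights, the option names, and the 1-to-5 scores are invented for illustration.

```python
DIMENSIONS = ("control", "compliance", "scalability", "cost", "innovation_speed")


def weighted_score(weights: dict[str, float], scores: dict[str, float]) -> float:
    """Weighted average of an option's scores across the five dimensions."""
    missing = [d for d in DIMENSIONS if d not in weights or d not in scores]
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    total_weight = sum(weights[d] for d in DIMENSIONS)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(weights[d] * scores[d] for d in DIMENSIONS) / total_weight


def rank_options(weights, options):
    """Return (option name, score) pairs, best score first."""
    scored = [(name, weighted_score(weights, s)) for name, s in options.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical priorities of a heavily regulated organization (higher = more important).
weights = {"control": 5, "compliance": 5, "scalability": 2, "cost": 3, "innovation_speed": 2}

# Hypothetical scores per option (1 = weak, 5 = strong).
options = {
    "on_premises": {"control": 5, "compliance": 5, "scalability": 2, "cost": 3, "innovation_speed": 2},
    "public_cloud": {"control": 2, "compliance": 3, "scalability": 5, "cost": 3, "innovation_speed": 5},
    "hybrid": {"control": 4, "compliance": 4, "scalability": 3, "cost": 3, "innovation_speed": 3},
}

for name, score in rank_options(weights, options):
    print(f"{name}: {score:.2f}")
```

A matrix like this does not make the decision, but it forces the weights to be stated openly, so that a different set of priorities visibly leads to a different ranking.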

In practice, three basic models have become established that help organizations navigate the tension between control, compliance, scalability, cost, and innovation speed:

None of these options is inherently better or worse. They mark reference points on a map where each organization must define its own path, depending on which aspects it prioritizes most.

Sovereign Cloud offerings can serve as a complementary option in specific scenarios. These are specialized services from global cloud providers that work with European data trustees or ensure EU-only operations. Their goal is to combine the scalability and service depth of international hyperscalers with local sovereignty. In practice, these models are still rare but can make sense when regulatory requirements have the highest priority while hyperscaler-level functionality is still desired.

Hybrid architectures remain a proven approach: critical workloads stay on-premises, while selected projects use cloud resources. This allows conflicting objectives to be mitigated without fully relinquishing sovereignty.

Every IT architecture arises from its specific context. The following case studies illustrate how organizations under very different conditions have set priorities, weighed decision dimensions, and selected technologies. HMS supported these organizations as a consulting and implementation partner – not with standard solutions, but with architectures precisely tailored to their individual requirements.

This case illustrates two contrasting paths: on one side, maximum sovereignty through a fully on-premises stack; on the other, high innovation speed through the use of existing cloud infrastructures.

Example A: The Sovereign Chatbot of a Financial Institution

Initial situation / context

A large European financial institution wanted to make internal documents searchable using generative AI. The central requirement was that confidential information must never leave the company. Compliance and control were top priorities.

Solution approach

Results & trade-offs

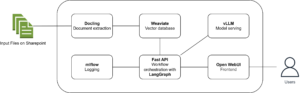

Figure 2: Schematic overview of the system architecture deployed on OpenShift.

Users interact with the GenAI chatbot through an Open WebUI frontend. Agent-based workflow orchestration is managed using LangGraph, deployed via a FastAPI backend. Text data is extracted from SharePoint using Docling and stored in a Weaviate vector database. Large Language Models (LLMs) and embedding models are deployed via vLLM for efficient inference. Centralized logging and tracing are handled using MLflow.

Example B: Innovation Speed with Azure

Initial situation / context

An industrial group also planned to introduce a chatbot to access internal knowledge sources – in this case, over 500,000 research reports. Unlike the financial institution, the company already operated a large Azure data platform integrated into existing business processes. The focus was on rapid integration into the existing environment.

Solution approach

Results & trade-offs

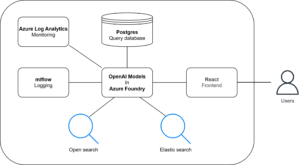

Figure 3: Schematic overview of the system architecture in Azure.

Users interact with the GenAI chatbot via a frontend implemented in React. OpenAI models are managed through Azure Foundry, while MLflow logs all LLM calls. User requests are stored in a PostgreSQL database. Hybrid document search combines Elasticsearch (for keyword search) and OpenSearch (for semantic search). The entire platform is monitored with Azure Log Analytics.
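The description above does not say how the keyword and semantic result lists are merged. One common technique for this is reciprocal rank fusion (RRF), shown here as a minimal sketch in plain Python. The document IDs are hypothetical, and the constant `k=60` is a conventional default rather than a value taken from the project.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document receives 1 / (k + rank) from every list it appears in;
    documents found by several retrievers accumulate a higher score.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for a deterministic result.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical result lists from a keyword index and a vector index.
keyword_hits = ["report-17", "report-04", "report-22"]
semantic_hits = ["report-04", "report-31", "report-17"]

print(reciprocal_rank_fusion([keyword_hits, semantic_hits]))
```

Because RRF works on ranks rather than raw scores, it avoids having to normalize keyword relevance scores against vector similarities, which is one reason it is a frequent choice for hybrid search.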

This case illustrates how different organizations address the tension between cost, scalability, and regulatory requirements – from minimalist lakehouses to highly scalable real-time platforms.

Example C: A Minimalist Lakehouse

Initial situation / context

A financial institution subject to strict regulatory requirements placed the highest value on digital sovereignty. The goal was to build a robust data platform that would remain compliant yet future-proof.

Solution approach

Results & trade-offs

Figure 4: Schematic overview of all system components.

The Data Processing App (center) contains an orchestrator module that receives commands and file paths via a message bus (top). It retrieves data from distributed storage (left) and performs a series of transformation and validation steps using DuckDB and Arrow, executed on OpenShift. Intermediate results are stored in distributed storage (bottom). The final output is written to a relational database and stored as Parquet files for distribution. A REST API server, along with direct ODBC/JDBC or file access, provides access to the processed data.

Example D: Real-Time Processing in the Production Plant

Initial situation / context

A manufacturing company wanted to process production data in real time to optimize operations. Innovation speed and scalability were the main focus.

Solution approach

Results & trade-offs

Figure 5: Schematic overview of the real-time data architecture.

Confluent Kafka (left) continuously captures sensor data from the plant and feeds it into the data platform. Databricks streaming jobs transform incoming data – performing matching, validation, enrichment, and contextualization – and write results to Delta Tables (top right). Some processed data is also stored in a PostgreSQL database (bottom right). Azure Data Factory orchestrates Apache Spark batch jobs (top left), including the retrieval of master and contextual data from Azure Data Explorer and the maintenance of Delta tables. Power BI and Azure ML Service (right) access platform data for further analysis and visualization.
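The validation and enrichment steps in such a streaming job can be illustrated conceptually with Python generators. This is plain Python, not the Databricks or Spark streaming API, and the field names, the master data lookup, and the decision to drop unmatched events are all assumptions made for the example.

```python
from typing import Iterable, Iterator

# Hypothetical minimum schema of a sensor event.
REQUIRED_FIELDS = ("sensor_id", "timestamp", "value")


def validate(events: Iterable[dict]) -> Iterator[dict]:
    """Pass on only events that carry all required fields with non-null values."""
    for event in events:
        if all(event.get(field) is not None for field in REQUIRED_FIELDS):
            yield event


def enrich(events: Iterable[dict], master_data: dict[str, dict]) -> Iterator[dict]:
    """Match each event to master data by sensor ID and attach the context."""
    for event in events:
        context = master_data.get(event["sensor_id"])
        if context is None:
            # Unmatched events are dropped here; a real pipeline might
            # route them to a separate table for later inspection.
            continue
        yield {**event, **context}


if __name__ == "__main__":
    master = {"s1": {"line": "A", "unit": "C"}}
    raw = [
        {"sensor_id": "s1", "timestamp": 1, "value": 20.5},
        {"sensor_id": "s1", "timestamp": 2, "value": None},  # fails validation
        {"sensor_id": "s9", "timestamp": 3, "value": 1.0},   # no master data
    ]
    for row in enrich(validate(raw), master):
        print(row)
```

Chaining the steps as generators mirrors the streaming idea: each event flows through validation and enrichment one at a time instead of being collected into a batch first.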

The case studies illustrate that there is no single correct architecture. Each represents a different set of priorities:

All solutions are valid – because they reflect each organization’s context and value creation logic.

Digital sovereignty does not emerge from dogmatic technology choices, but from deliberate trade-offs. In this article, we have presented five dimensions for structured decision-making: control, compliance, scalability/availability, cost, and speed of innovation.

Our case studies demonstrate that sovereignty does not mean maximum independence, but rather informed freedom of choice. Every architecture involves dependencies – the key is to enter into them consciously and align them with the organization’s strategic goals and operating conditions.

This is exactly where HMS comes in: we help organizations map their requirements transparently, define clear priorities, and develop architectures that balance security, speed, and future readiness – enabling true digital sovereignty.