This is the second article of our HMS article series to Model Context Protocol (MCP), providing a deep dive into its structure and concepts.

We break down the roles of MCP host, client, and server, explore how capabilities like tools and resources are exposed, and look at how these components interact in typical workflows. The article also covers core protocol primitives, transport mechanisms, and security considerations.

If you haven’t read our first article yet: MCP: Scalable and Standardized LLM Integration for Enterprises

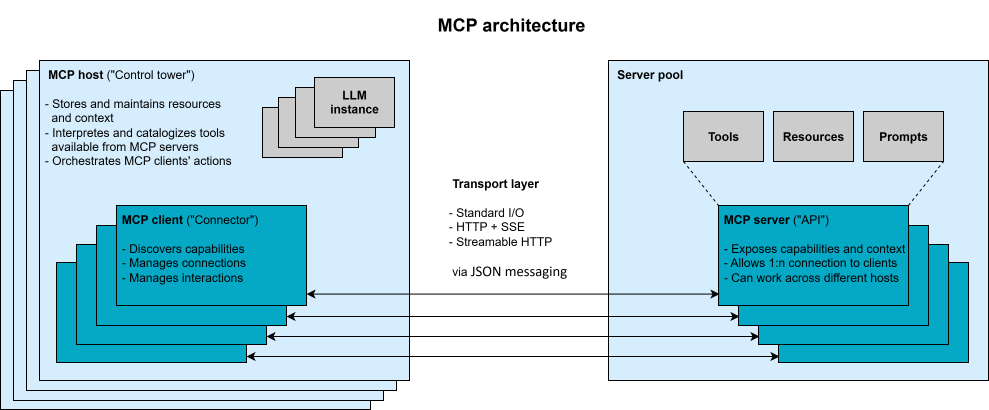

MCP uses a client–server architecture with three key roles:

Learn how MCP works: roles of host, client & server, core primitives, transport layer, and security for enterprise LLM workflows.

The MCP host can connect with multiple MCP servers in parallel, with each server managed by its own client. This modular architecture ensures clear separation of responsibilities and supports sandboxing and controlled access, so that the host e.g. can enforce user approvals between the LLM and any server interaction.

At least one LLM instance operates within the MCP host and acts as the consumer of the context. Through the host, the instance(s) can trigger MCP clients to invoke tools, or access resources and prompts provided by an MCP server. However, the LLM does not occupy a defined role in the MCP specification and functions as part of the host rather than a distinct protocol component.

From the perspective of agentic systems, the tasks of the MCP host and the agent are very similar. Depending on the use case and interpretation, the terms "MCP host" and "agent" may be used interchangeably. Alternatively, they can be treated as distinct components: The host functions as the user-facing application wrapper that provides the MCP infrastructure. The agent, in supplement, encapsulates the application logic acting as an intelligent orchestrator that interfaces with the LLM and manages client interactions. This design allows for multiple agents to run concurrently within a single host, each treated as a distinct instance with its own context and resource scope, potentially working together. Regardless of the interpretation, the agent always operates on the host side of the protocol and, like the LLM, does not occupy a defined MCP role.

Communication within MCP is handled through a transport layer based on JSON-RPC 2.0. This enables structured messaging over stateful connections between clients and servers. MCP supports both local and remote transport mechanisms for MCP servers running in local or cloud environments, respectively. For local messaging, Standard Input/Output (STDIO) is simple and effective. For remote integration, the protocol supports HTTP using Server-Sent Events (SSE) or Streamable HTTP. Although the latter will be recommended for remote connections in future, it is not yet fully supported across all currently available MPC Software Development Kits (SDKs). In addition to a fast-growing number of SDKs from various sources, Anthropic itself offers official SDKs for different programming languages.

During operation, MCP clients and servers exchange requests, responses and notifications. Each server declares its available set of capabilities via a JSON Schema, e.g. a cloud storage server might offer tools such as “search” or “duplicate_file”. This setup supports a turn-based, conversational process of interaction: The LLM communicates in natural language, but under the hood it calls tools and accesses resources as needed and in a standardized way.

We will look at this again in a following chapter of this article about a workflow example.

MCP introduces three primitives (modes of functionality) that MCP servers offer and expose:

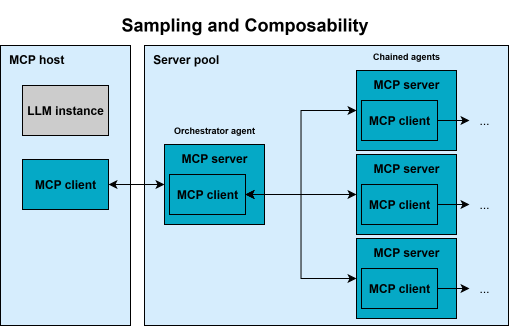

Diagram of MCP composability: how servers, clients, and hosts interact with LLMs, featuring sampling for multi-agent workflows.

An individual MCP server can expose any combination of these primitives, ranging from specialized servers focused on a single primitive up to monolithic servers offering a wide range of functionalities. As soon as the MCP server and the LLM are planned to interact automatically, a model-controlled primitive should be available for usage.

These primitives are supplemented by communication features targeting the flow between client and server (vice versa) enabling additional use cases:

Together, these primitives and features let MCP define rich interfaces: data, actions and interactive guides are offered (resources, tools, and prompts) as well as dynamic behaviors (roots, sampling, elicitation) offered in a standardized JSON-based protocol.

This is completed by the concept of composability, meaning that an MCP client could itself act as a server and vice versa, shown in the figure above. Together with the sampling idea, this enables chaining of MCP servers, empowering multi-agent topologies. This composability is part of MCP’s vision for a modular ecosystem.

For more information about the described core concepts and to keep up-to-date with new developments, see Anthropic’s specification page. For an overview on clients supporting these core concepts, see Anthropic’s feature support matrix.

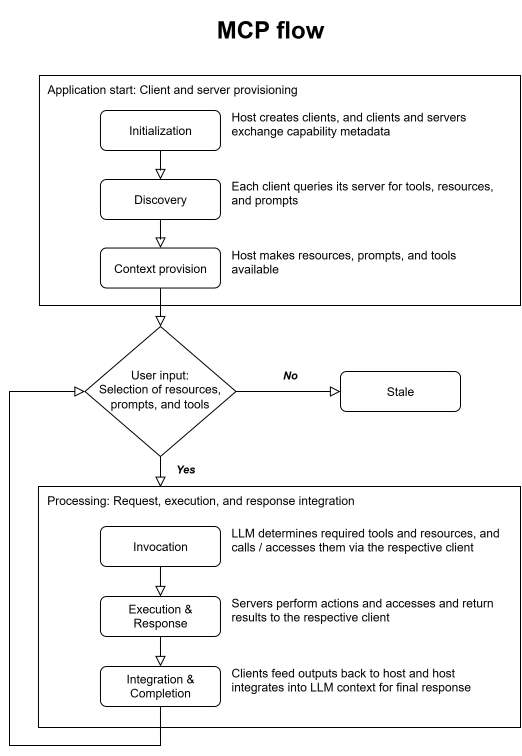

To show and explain how MCP can integrate into an application workflow, let’s imagine an exemplary RAG (Retrieval Augmented Generation) application which follows the steps and flow shown in the figure below.

When the application starts, it creates multiple MCP clients. Each client initiates a handshake with a corresponding server to exchange information about supported capabilities and protocol versions. This handshake ensures both sides are compatible and ready to communicate effectively.

Following initialization, each client sends a discovery request to its connected server to determine what specific capabilities are available. These capabilities might include tools, resources, or prompts. The MCP server responds with a structured list describing each of these elements, which the client relays to the host. The MCP host can then interpret this information and prepare it for use.

Once the discovery is complete, the host application translates the available capabilities into a format suitable for interaction with an LLM. This might involve parsing tool definitions into JSON schemas for function calling or organizing resources and prompts in a way that the LLM can reference them during inference.

Flowchart of the MCP workflow: initialization, discovery, context provision, invocation, execution, and integration of responses.

The application is now prepared for user interaction. When a user inputs a query such as “What are the open issues in the HMS-GitHub repository?”, the LLM analyzes the request and determines that invoking a specific tool or accessing a resource is necessary. It identifies the appropriate capability, and the MCP host instructs the correct client to send an invocation request to the server responsible for that tool.

The MCP server receives this invocation, such as a “fetch_github_issues” request with the parameter “HMS”, executes the underlying logic (e.g., makes an API call to GitHub), and processes the result. Once the operation is completed, the server sends the response data back to the MCP client.

The client then forwards this result to the MCP host, which integrates the information into the LLM’s context. Any further LLM reasoning continues with this augmented context. Using the updated and relevant data, the LLM generates a response that reflects both its internal reasoning, and the fresh external information just retrieved.

Such an MCP flow allows the LLM to remain grounded in live data, access specialized capabilities as needed, and deliver rich, informed responses to users. The separation between MCP host, client, and server ensures modularity, scalability, and flexibility in deploying and maintaining various tools across different environments.

Like any powerful new technology, MCP brings new security challenges that are still being addressed. Because MCP servers often hold privileged access (e.g. API keys, database credentials) or data, they become high-value targets.

One possible approach could be token theft: an attacker who compromises an MCP server could steal OAuth tokens and gain “keys to the kingdom”, e.g. full access to a user’s email, files, and cloud services, all without triggering normal sign-in alerts. An exploited MCP server could perform unauthorized actions across all connected accounts. Similarly, a compromised MCP server could alter its tools post-install: a fake “weather” tool could update itself to exfiltrate data unless versioning and integrity checks are in place.

Another risk is “confused deputy” authorization: if a server executes actions on behalf of the LLM, it must enforce that it’s doing so with the right user permissions. Current MCP spec uses OAuth2.1 for remote authentication, but it is noted that parts of the specification may conflict with enterprise best practices. Anthropic and the community are already updating the specification to strengthen authorization and credential handling.

MCP also introduces novel LLM-specific vectors. For example, prompt injection becomes more dangerous: if a user (or attacker) feeds a malicious prompt to the LLM, it could trick the system into calling tools unexpectedly. Even without malicious intent, such a prompt might cause unintended actions (e.g. adding a stealthy user to a cloud account), see for example this incident.

To mitigate these issues, there are several recommended best practices:

In response to these concerns, the MCP roadmap includes adding features like an official Registry for discovering and centralizing validated remote servers, Governance mechanisms for transparent tracking of standards, and Validation tools for test suites to verify compliance. These will help the ecosystem mature with security in mind.

MCP defines a modular protocol that allows LLM applications to interact with external data, tools, and prompts through a structured host–client–server architecture. Hosts manage context and control execution, clients handle protocol messaging, and servers expose capabilities. MCP’s primitives (resources, tools, and prompts) support grounded and extensible LLM workflows, while additional features like sampling and elicitation enable dynamic interactions.

The protocol’s transport is based on JSON-RPC 2.0, with support for both local and remote servers. Security remains an active area of development, especially around token handling, tool invocation controls, and defenses against prompt injection. Best practices include sandboxing, least-privilege access, and audit mechanisms.

Organizations looking to apply MCP should begin by experimenting in controlled environments before moving too fast into production. Given its ongoing evolution, it is important to stay up to date with changes, as new features continue to make the protocol more powerful and robust. For decision-makers and architects, MCP deserves close attention since it addresses real challenges in LLM adoption.

By building MCP-compatible systems, businesses can ensure their LLM applications remain flexible and future-proof. In short, MCP aims to be the backbone of the next generation of LLM-powered systems. As the ecosystem matures, MCP is becoming a foundation for secure, modular, agentic applications across both local and cloud environments.

In the next article of the HMS series to MCP, we will conduct a little reality check. You will see how we, the HMS team, are already applying MCP to streamline operations, to implement or enhance customer applications, and in the end to deliver smarter services. Expect practical insights, code snippets, and even a glimpse into our proprietary code migration tool and some customer projects.

Explore successful implementations in our project references.